We are in the process of writing this documentation.

Developer's guide

- 1: Getting started

- 2: Single server deployment

- 3: System Overview

- 3.1: Persistence

- 3.2: Tiled Imagery

- 3.3: Event Handling/Communication

- 4: Contribution guidelines

1 - Getting started

2 - Single server deployment

This document describes a Janelia Workstation deployment intended for setting up a personal development environment. Unlike the canonical distributed deployments, which use Docker Swarm, this deployment uses Docker Compose to orchestrate the services on a single server.

Note that this deployment does not build and serve the Workstation client installers, although that could certainly be added in cases where those pieces need to be developed and tested. In most cases, however, it is expected that this server-side deployment be paired with a development client built and run directly from IntelliJ or NetBeans.

System Setup

This deployment should work on any system where Docker is supported. Currently, it has only been tested on Scientific Linux 7 and macOS Mojave.

To install Docker and Docker Compose on Oracle Linux 8, follow these instructions.

Clone This Repo

Begin by cloning this repo:

git clone https://github.com/JaneliaSciComp/jacs-cm.git

cd jacs-cm

Configure The System

Next, create a .env.config file inside the jacs-cm directory. This file defines the environment (usernames, passwords, etc.). You can copy the template to get started:

cp .env.template .env.config

vi .env.config

At minimum, you must customize the following:

- Configure UNAME and GNAME to your liking. Ideally, these should be your username and primary group.

- Set up REDUNDANT_STORAGE and NON_REDUNDANT_STORAGE to point to directories accessible by UNAME:GNAME. If you want to use the defaults, you may need to create these directories and set the permissions yourself.

- Set HOST1 to the hostname you are deploying on. If possible, use a fully-qualified hostname; it should match the SSL certificate you intend to use.

- Fill in all the unset passwords with passwords longer than 8 characters. Use only alphanumeric characters; special characters are not currently supported.

- Generate 32-byte secret keys for JWT_SECRET_KEY and MONGODB_SECRET_KEY.
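One way to produce the secret keys is with OpenSSL. The sketch below is an assumption about the expected format, not something this guide specifies: it generates 32 random bytes and hex-encodes them, giving a 64-character alphanumeric string. The helper name gen_key is illustrative. If the services expect a different encoding or length, adjust accordingly.

```shell
# Hypothetical helper: print N random bytes (default 32) as a hex string.
# Hex output is alphanumeric only, so it avoids unsupported special characters.
gen_key() {
  openssl rand -hex "${1:-32}"
}

# Print lines that can be pasted into .env.config.
printf 'JWT_SECRET_KEY=%s\n' "$(gen_key)"
printf 'MONGODB_SECRET_KEY=%s\n' "$(gen_key)"
```

Each call produces a fresh value, so the two keys will differ.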

Enable Databases (optional)

Currently, Janelia runs MongoDB outside of the Swarm, so the databases are commented out in the deployment. If you’d like to run the databases as part of the swarm, edit the yaml files under ./deployments/jacs/ and uncomment the databases.

Initialize Filesystems

The first step is to initialize the filesystem. Ensure that your REDUNDANT_STORAGE (default: /opt/jacs) and NON_REDUNDANT_STORAGE (default: /data) directories exist and can be written to by your UNAME:GNAME user (default: docker-nobody).

If you are using Docker for Mac, you’ll need to take the additional step of configuring share paths at Docker -> Preferences… -> File Sharing. Add both REDUNDANT_STORAGE and NON_REDUNDANT_STORAGE and then click “Apply & Restart” to save your changes.
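Before running the initialization, a quick preflight check can confirm that both storage directories exist and are writable. This is a minimal sketch: the function name check_storage_dir is illustrative, the defaults are the ones quoted above, and the check reflects the permissions of whichever user runs it, so run it as your UNAME user.

```shell
# Hypothetical helper: succeed only if the directory exists and is writable
# by the user running this script.
check_storage_dir() {
  if [ ! -d "$1" ]; then
    echo "missing: $1" >&2
    return 1
  fi
  if [ ! -w "$1" ]; then
    echo "not writable: $1" >&2
    return 1
  fi
  echo "ok: $1"
}

# Fall back to the documented defaults when the variables are not exported.
for dir in "${REDUNDANT_STORAGE:-/opt/jacs}" "${NON_REDUNDANT_STORAGE:-/data}"; do
  check_storage_dir "$dir" || echo "fix $dir before running init-local-filesystem" >&2
done
```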

Next, run the filesystem initialization procedure:

./manage.sh init-local-filesystem

You should see output about directories being created and initialized. If there are any errors, they need to be resolved before moving further.

Once the initialization is complete, you can manually edit the files found in CONFIG_DIR. You can use these configuration files to customize much of the JACS environment.

SSL Certificates

At this point, it is strongly recommended that you replace the self-signed certificates in CONFIG_DIR/certs/* with your own certificates signed by a Certificate Authority:

sudo cp /path/to/your/certs/cert.{crt,key} $CONFIG_DIR/certs/

sudo chown docker-nobody:docker-nobody $CONFIG_DIR/certs/*

If you use self-signed certificates, you will need to set up the trust chain for them later.

External Authentication

The JACS system has its own self-contained authentication system, and can manage users and passwords internally.

If you’d prefer that users authenticate against your existing LDAP or ActiveDirectory server, edit $CONFIG_DIR/jacs-sync/jacs.properties and add these properties:

LDAP.URL=

LDAP.SearchBase=

LDAP.SearchFilter=

LDAP.BindDN=

LDAP.BindCredentials=

The URL should point to your authentication server. The SearchBase is part of a distinguished name to search, something like “ou=People,dc=yourorg,dc=org”. The SearchFilter is the attribute to search on, something like “(cn={{username}})”. BindDN and BindCredentials define the distinguished name and password for a service user that can access user information like full names and emails.
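As an illustration, the properties could be appended with a small helper like the one below. The SearchBase and SearchFilter values are the examples from the text; the URL, BindDN, and BindCredentials values are placeholders invented for this sketch, and the function name append_ldap_props is illustrative. Replace all of them with the values for your own directory server.

```shell
# Hypothetical helper: append example LDAP settings to the given properties file.
# The quoted heredoc keeps {{username}} literal.
append_ldap_props() {
  cat >> "$1" <<'EOF'
LDAP.URL=ldap://ldap.yourorg.org:389
LDAP.SearchBase=ou=People,dc=yourorg,dc=org
LDAP.SearchFilter=(cn={{username}})
LDAP.BindDN=cn=service-user,ou=People,dc=yourorg,dc=org
LDAP.BindCredentials=changeme
EOF
}

# Example call (not run here):
# append_ldap_props "$CONFIG_DIR/jacs-sync/jacs.properties"
```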

Start All Containers

Now you can bring up all of the application containers:

./manage.sh compose up -d

You can monitor the progress with this command:

./manage.sh compose ps

Initialize Databases

If you are running your own databases, you will need to initialize them now:

./manage.sh init-databases

Verify Functionality

You can verify the Authentication Service is working as follows:

./manage.sh login

You should be able to log in with the default admin account (root/root), or any LDAP/AD account if you’ve configured external authentication. This will return a JWT that can be used on subsequent requests.
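To sanity-check the returned token, you can decode its payload. A JWT has three dot-separated base64url segments (header, payload, signature), and the payload is plain JSON. The sketch below assumes you have copied the token string by hand, since the exact output format of the login command is not described here. The function name jwt_payload is illustrative, and no signature verification is performed.

```shell
# Hypothetical helper: print the JSON payload (second segment) of a JWT.
jwt_payload() {
  # Take the second segment and map base64url characters to standard base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the '=' padding that base64url encoding omits.
  pad=$(( (4 - ${#seg} % 4) % 4 ))
  while [ "$pad" -gt 0 ]; do
    seg="${seg}="
    pad=$((pad - 1))
  done
  printf '%s' "$seg" | base64 --decode
}

# Example call (not run here), with TOKEN holding the string returned by login:
# jwt_payload "$TOKEN"
```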

If you run into any problems, these troubleshooting tips may help.

Updating Containers

Containers in this deployment are automatically updated by Watchtower whenever a new one is available with the “latest” tag. To update the deployment, simply build and push a new container to the configured registry.

Stop All Containers

To stop all containers, run this command:

./manage.sh compose down

Build and Run Client

Now you can check out the Janelia Workstation code base in IntelliJ or NetBeans and run it as per its README.

The client will ask you for the API Gateway URL, which is just https://$HOST1. In order to automatically connect to your standalone gateway instance, you can create a new file at workstation/modules/Core/src/main/resources/my.properties with this content (replacing the variables with the values from your .env.config file):

api.gateway=https://$HOST1
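The substitution can also be scripted. The following is a minimal sketch that assumes .env.config contains an unquoted line of the form HOST1=your.host.name; the function name write_gateway_props is illustrative.

```shell
# Hypothetical helper: read HOST1 from an env file and write my.properties.
write_gateway_props() {
  env_file=$1
  out_file=$2
  # Use the last HOST1 assignment in the file, keeping everything after '='.
  host=$(grep -E '^HOST1=' "$env_file" | tail -n 1 | cut -d= -f2-)
  if [ -z "$host" ]; then
    echo "HOST1 is not set in $env_file" >&2
    return 1
  fi
  printf 'api.gateway=https://%s\n' "$host" > "$out_file"
}

# Example call (not run here), from a directory containing both checkouts:
# write_gateway_props jacs-cm/.env.config \
#   workstation/modules/Core/src/main/resources/my.properties
```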

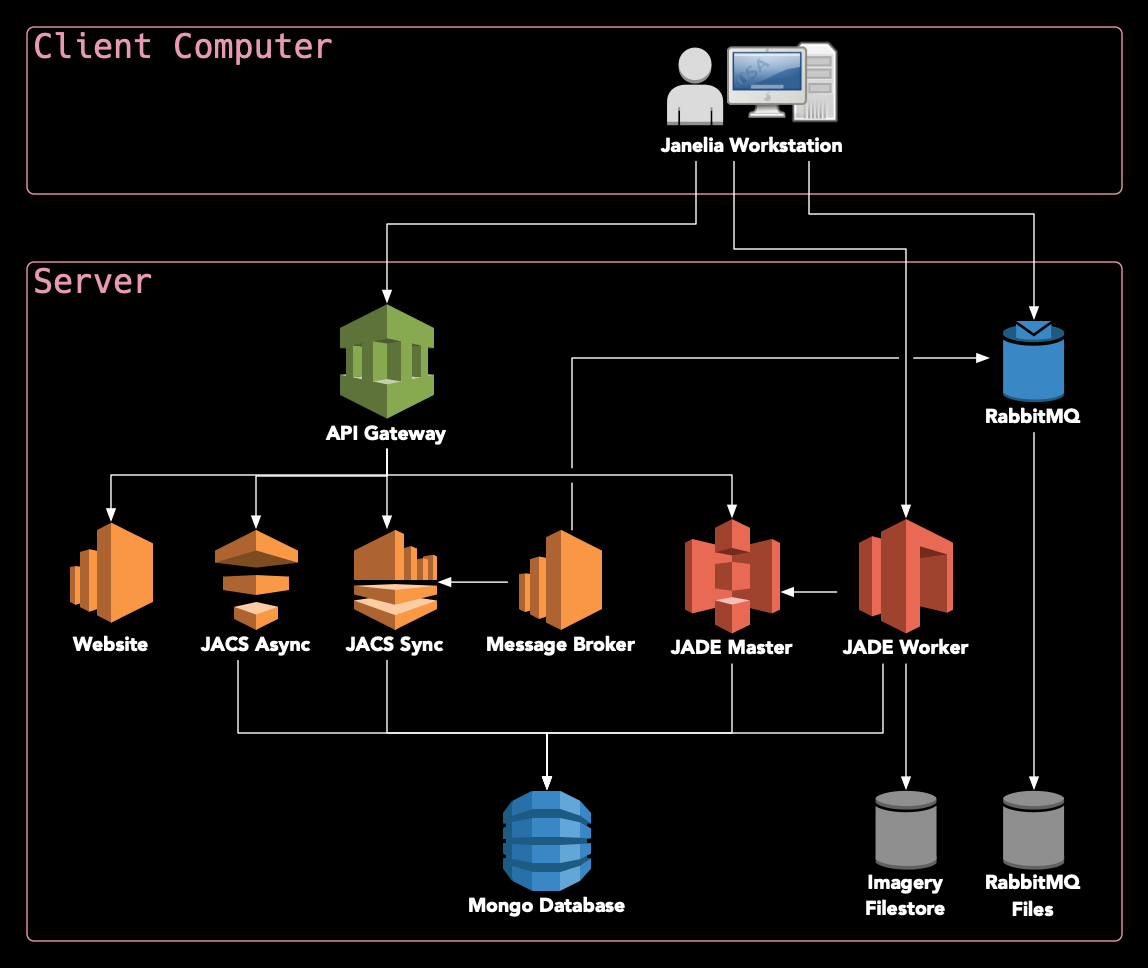

3 - System Overview

We are in the process of writing this documentation.

3.1 - Persistence

This section covers a basic overview of how Horta persists neurons, workspaces, samples and preferences.

Asynchronous vs synchronous saving

The final persistence store for Horta is MongoDB, a document database, whether the data is saved asynchronously or synchronously. Most Horta data is saved synchronously; the purpose of asynchronous saving is to:

- Ease the burden on the annotator to constantly save their work

- Share annotations in real time with other annotators or machine algorithms working in the same workspace

Persistence flow

All access to MongoDB is wrapped by a RESTful API, jacs-sync, with a number of endpoints for CRUD operations for TmWorkspace, TmSample, and TmNeuronMetadata. The basic class/component diagram is shown below. When a workspace is first loaded, it relies on NeuronModelAdapter to stream in parallel all the neuron annotations for that workspace into the client app. The following initialization steps are performed with the annotation data:

- The concurrent map in NeuronModel is populated.

- LoadWorkspace/Sample events are fired to notify relevant GUI panels to update.

- Spatial Filters are initialized with vertices (TmGeoAnnotations) from the neurons for snapping and general neighborhood convenience methods.

Once the workspace is initialized and the annotator makes a change to a neuron, the entire neuron is serialized into an AMQP message and sent through the NeuronModelAdapter using RabbitMQ. When a client first starts up, it creates a temporary queue that publishes to a RabbitMQ topic exchange (ModelUpdates). Another service, jacs-messaging, listens to this exchange using the UpdateProcessor queue and consumes messages from the exchange. It determines how to process these messages and, if a message requires persisting the neuron to the database, connects over the REST API to the jacs-sync service to perform the operation.

Once the operation is complete, an acknowledgement/update message is posted on the RabbitMQ fanout exchange, ModelRefresh. If there are any errors, they get posted on the ModelError exchange. All the clients are subscribed to the ModelRefresh exchange, so they get notifications on any neuron changes. These are processed as multi-threaded callbacks in RefreshHandler, which filters based on the current workspace and fires update events if the message is relevant.

3.2 - Tiled Imagery

Horta 2D

Horta’s 2D and 3D viewers were originally two separate visualization packages, so the way that they load tile imagery is quite different. Horta2D was called LVV (Large Volume Viewer) and exclusively loads many 2D slices from a TIFF Octree at a specific resolution to produce a mosaic that fills the window. These 2D slices are retrieved from the jacs-sync REST API based on the current focus in the window.

In the LVV Object Diagram above, the key classes to examine when dealing specifically with the UI are QuadViewUI, TraceMode, AnnotationPanel, SkeletonActorModel, and AnnotationManager. QuadViewUI is the main Swing container for LVV, wrapping the 3D viewer and AnnotationPanel. The AnnotationPanel is the Swing panel on the right of the layout that has all the menu options, neuron filter options and annotation lists for each neuron. TraceMode is the main User EventManager, handling all key presses and mouse clicks. The SkeletonActorModel is the Controller for all the Neuron tracing (anchors/vertexes and directed paths between them).

Horta2D has a different tile-loading mechanism, but shares all other data with Horta3D through TmModelManager, including the current focus location, selection, resolution, voxel-micrometer mapping, etc.

Horta 3D

Horta3D loads 3D tiles as 3D texture-mapped chunks of the type of imagery (KTX, TIFF, MJ2, Zarr) associated with the current workspace/sample. These chunks all inherit from GL3Actor (TetVolumeActor, BrickActor), and are all loaded into a collection that is rendered by NeuronMPRenderer in its VolumeRenderPass. The main JOGL OpenGL window is SceneWindow.

When Horta3D is first loaded, the focus location is initialized from the sample bounding box and center information that is calculated on scene/workspace loading. The top component of Horta3D, NeuronTracerTopComponent, then delegates to the NeuronTileLoader to find the appropriate 3D chunks for that view. The BrickSource objects are used to load 3D voxel data and convert it into a 3D object that can be rendered in the SceneWindow.

In the case of KTX, the TetVolumeActor and KTXBlockLoadRunner work together to queue up a number of 3D chunks for multi-threaded loading based on the zoom resolution and current focus in the SceneWindow. The intent is to load as many chunks as are necessary to fill up the viewport.

3.3 - Event Handling/Communication

This section briefly covers how information is shared throughout the GUI in Horta.

EventBus

To preserve code independence between different modules in Horta, the EventBus from Google's Guava library is used. This acts like an internal publish/subscribe framework within the client, allowing methods to listen for certain events to happen. Events are sent asynchronously on the EventBus, so they are managed in a separate thread from the main AWT thread.

In Horta, the EventBus is mainly used to communicate events that concern multiple GUI components. Events that are mostly concerned with communication within a GUI component are still managed using Listeners.

- ViewerEventBus is the main wrapper around the eventbus for Horta, and is managed by TmViewerManager.

- Each main component has a Controller class that is the main hub for incoming eventbus messages (e.g., PanelController for the Horta Command Center). Messages are then appropriately routed to local GUI components from the controller.

All the eventbus events are listed in the org.janelia.workstation.controller.eventbus package and include events such as SampleCreateEvent, WorkflowEvent, etc. Because events can have inheritance, methods can listen to the super Event class and get notifications on the whole stack of events that inherit from the super class.